Don’t Click That! January ’25

| Editor’s note: Below you’ll find the latest edition of Productiv’s monthly Shadow AI Newsletter: Don’t Click That! If you don’t want to wait, sign up below to get it right in your inbox. That way, you won’t be that guy breaking news two weeks late. One email, once per month. |

Welcome back to Don’t Click That! The newsletter about all the tools you’re responsible for but never approved. This month, a naughty politician plays with ChatGPT, industry-wide data on AI training posture, and some research for combating sneaky LLMs. All that and a new header. Let’s tap in.

Careful Where You Copy+Paste

The senior-most official at the US Government’s Cybersecurity and Infrastructure Security Agency, an agency tasked with securing federal networks against state-backed hackers, dropped sensitive documents into a public version of ChatGPT last summer. In his defense, he did ask permission. Says a CISA spokesperson, the agency is committed to “harnessing AI and other cutting-edge technologies to drive government modernization”. Can’t fault ya there, except for the whole “Cybersecurity and Infrastructure Security” thing.

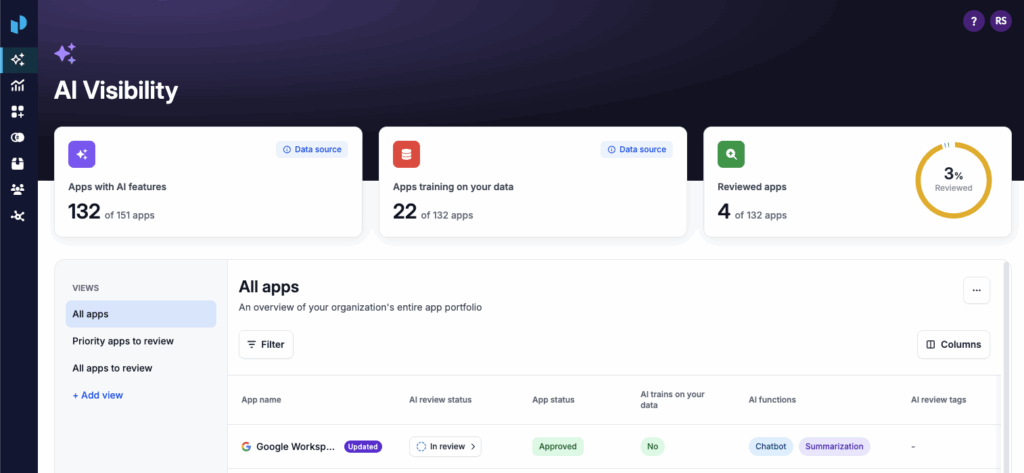

58% of All Software Is AI-Enabled

According to a blockbuster report from the writer of this very newsletter, over half of all software is AI-enabled, and 67% of AI-enabled apps aren’t revealing their training posture. We scanned 18,000+ SaaS apps to find out which ones have AI, which ones are training on customer data, and which aren’t telling. Ready to see which of your favorite apps are gettin’ down and dirty with your data? Full report here.

When Your AI is Compromised Before it Ships

New research out of Microsoft lays the groundwork for a scanning tool that could detect AI risk at scale. Specifically, it could identify when an LLM has suffered “model poisoning”, the process of embedding a backdoor vulnerability during training. Guess we’re lucky the Death Star didn’t run on Windows. From the blog:

“Modern AI assurance therefore relies on ‘defense in depth,’ such as securing the build and deployment pipeline, conducting rigorous evaluations and red-teaming, monitoring behavior in production, and applying governance to detect issues early and remediate quickly.”

Oof, that’s a lot of stuff. If only there were some kind of specially designed & readily available product that could get you started.

Regulation When?

The EU Commission adds its voice to the chorus of politicians kicking their sweeping AI policy down the road. The reason? They can’t seem to agree on what constitutes “high-risk”. To paraphrase many different politicians: “but AI is, like, really hard”. The good news: you’ve still got time to shore up the ol’ AI governance protocol before regulators start fining you $20k a pop.

That does it for this month. We’ll see you next month with a fresh new blend of proprietary data, impending regulatory risk, and cautionary tales.

OK, fine. One more for the road:

For Your Next Slack Rampage

About Productiv:

Productiv is the IT operating system to manage your entire SaaS and AI ecosystem. It centralizes visibility into your tech stack, so CIOs and IT leaders can confidently set strategy, optimize renewals, and empower employees.